II.4.3 Measuring Emotions from Voice

The tone of the human voice is a primary carrier of emotions. To recognize emotions from voice, the audio files first have to be broken into segments and preprocessed. To that end, voice activity has to be detected, which can be done for instance with the WebRTC Voice Activity Detector API from Google. In this step, phases of silence are also recognized and removed. As a last preprocessing step, the audio is converted to a standardized sample rate of 22,050 Hz with 16 bits per sample.

To find emotions in human speech, mostly spectral features or prosodic features (relating to rhythm and intonation) are used. The most popular spectral feature set for voice emotion recognition is the Mel Frequency Cepstral Coefficients (MFCCs), which can be interpreted as a compact representation of the short-term power spectrum of the speech signal. For instance, the popular Librosa Python library includes an MFCC implementation. It applies a Fourier transform to short, successive windows of the speech signal, decomposing each window into its individual frequencies and their amplitudes, and thus converting the signal from the time domain into the frequency domain. The result of the transform for a single window is called a spectrum; the sequence of spectra over time forms a spectrogram. The frequency axis (y-axis) of the spectrogram is then mapped to the Mel scale, which spaces pitches so that equal steps on the scale sound equally distant to a human listener. The cepstral coefficients themselves are obtained from the logarithm of these Mel-scaled energies via a discrete cosine transform.
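The step from the time domain to the frequency domain, and from there to the Mel scale, can be illustrated with a small sketch. It is not how Librosa is implemented: it uses a naive discrete Fourier transform on a single synthetic frame, and the commonly used formula mel = 2595 · log10(1 + f / 700) for the Mel mapping. The function names, the 1,000 Hz test tone, and the 441-sample frame length are assumptions chosen purely for illustration.

```python
import cmath
import math

SAMPLE_RATE = 22050   # Hz, the standardized rate used in preprocessing
FRAME_LEN = 441       # illustrative frame length: bin width is exactly 50 Hz


def power_spectrum(frame):
    """Naive discrete Fourier transform; returns the power of bins 0..N/2."""
    n = len(frame)
    powers = []
    for k in range(n // 2 + 1):
        coeff = sum(x * cmath.exp(-2j * math.pi * k * t / n)
                    for t, x in enumerate(frame))
        powers.append(abs(coeff) ** 2)
    return powers


def hz_to_mel(freq_hz):
    """Map a frequency in Hz to the Mel scale (common 2595/700 formula)."""
    return 2595.0 * math.log10(1.0 + freq_hz / 700.0)


def mel_to_hz(mel):
    """Inverse of hz_to_mel."""
    return 700.0 * (10.0 ** (mel / 2595.0) - 1.0)


# Synthetic frame: a pure 1,000 Hz tone (hypothetical test signal).
tone_hz = 1000.0
frame = [math.sin(2 * math.pi * tone_hz * t / SAMPLE_RATE)
         for t in range(FRAME_LEN)]

# Time domain -> frequency domain: find the strongest frequency bin.
power = power_spectrum(frame)
peak_bin = max(range(len(power)), key=power.__getitem__)
peak_hz = peak_bin * SAMPLE_RATE / FRAME_LEN

# Equal steps on the Mel scale correspond to growing steps in Hz.
mel_points = [i * 500.0 for i in range(5)]
hz_points = [mel_to_hz(m) for m in mel_points]
hz_steps = [b - a for a, b in zip(hz_points, hz_points[1:])]

print(f"peak at {peak_hz:.1f} Hz = {hz_to_mel(peak_hz):.1f} mel")
print("Hz width of successive 500-mel steps:", [round(s) for s in hz_steps])
```

The widening Hz steps in the last line show why the Mel scale compresses high frequencies: the ear resolves pitch differences more finely at low frequencies than at high ones.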

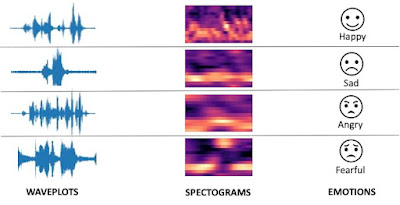

Figure 42. Voice Emotion Recognition Process

MFCC is particularly well suited for voice emotion recognition because, by using the Mel scale, it takes the human ear's uneven sensitivity to different frequencies into account. Figure 42 illustrates how waveform plots are converted into spectrograms, which are then mapped to emotions by a machine learning model that has been trained on pre-labeled voice emotion datasets.

Good results have been reached with 26 MFCC features calculated over a time window of 30 ms. To train the model, datasets like RAVDESS (the Ryerson Audio-Visual Database of Emotional Speech and Song) are used. RAVDESS contains pre-labeled speech segments in which actors speak a sentence with a given emotional expression, among them happy, sad, angry, fearful, disgusted, and surprised. The RAVDESS segments can then be used for both training and testing a Convolutional Neural Network (CNN) or a Long Short-Term Memory (LSTM) recurrent neural network (RNN), which recognizes a particular emotion with about 70% accuracy.
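To make the window size concrete, the following sketch works out what a 30 ms window means at a 22,050 Hz sample rate and how a signal is cut into frames, each of which would yield one vector of 26 MFCCs. The hop length (50% overlap) and the helper name `frame_signal` are assumptions for illustration; the text above does not specify how far successive windows are shifted.

```python
SAMPLE_RATE = 22050       # Hz, from the preprocessing step
WINDOW_SECONDS = 0.030    # 30 ms analysis window
N_MFCC = 26               # number of coefficients per frame


def frame_signal(samples, frame_length, hop_length):
    """Cut a signal into overlapping frames, dropping any incomplete tail."""
    frames = []
    start = 0
    while start + frame_length <= len(samples):
        frames.append(samples[start:start + frame_length])
        start += hop_length
    return frames


# 30 ms at 22,050 Hz is 661.5 samples; truncated to 661 whole samples.
frame_length = int(SAMPLE_RATE * WINDOW_SECONDS)

# Assumption: successive windows overlap by half (not stated in the text).
hop_length = frame_length // 2

# One second of placeholder audio (silence) stands in for a real segment.
one_second = [0.0] * SAMPLE_RATE
frames = frame_signal(one_second, frame_length, hop_length)

# Each frame would produce one row of 26 MFCCs for the classifier input.
feature_shape = (len(frames), N_MFCC)
print("frame length:", frame_length, "samples")
print("feature matrix shape for 1 s of audio:", feature_shape)
```

In Librosa, these values would roughly correspond to the `n_mfcc`, `n_fft`/`win_length`, and `hop_length` arguments that can be passed to `librosa.feature.mfcc`; the resulting matrix of frames by coefficients is what a CNN or LSTM would consume.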

We have used our Voice Emotion System, for instance, to recognize emotions from voice in face-to-face meetings and in a theater play.
